First, let me apologize for that somewhat misleading headline. The Horus wearable is fascinating and can improve how blind people experience the world, but it won’t give them back their sight. It will, however, turn their world into a narration of sorts. That takes some explaining.

The Italian start-up behind Horus is Eyra. It has built a working prototype that acts as the eyes of a blind person. Those artificial eyes can help the wearer navigate the world, read non-braille text and even recognize faces.

At the moment, Eyra is still testing its latest prototype in Italy, but it plans to expand to English-speaking countries in late 2017.

Horus

Like a friend in your ear, Horus transcribes the physical world into spoken words. Rather than piping sound into your ear canal, which would block out important ambient sounds like sirens or ringing phones, Horus uses bone conduction technology.

Bone conduction transmits sounds through your skull, circumventing your ear canal, so you still hear the world around you.


The device wraps around the back of your head and connects to the “brain” of Horus via a cord. Horus packs plenty of sensors and cameras, so it can not only “see” the world but also pick up details that a single lens cannot.

A 2D camera cannot sense depth, so avoiding low-hanging tree limbs would be tough without some extra help. We’ll come back to what that is…

Deep learning

The cherry on top with Horus is its use of deep learning. It’s a tough subject to grasp beyond the conceptual level, at least for this author.

Deep learning is a more advanced form of machine learning, in which an application draws deeper inferences from the data it is given. Applications that use machine learning build up relationships between pieces of information over time, somewhat like the way your brain works.

For example, your virtual assistant, such as Siri or Alexa, can learn your name because you entered that information. That is about as simple as learning gets.

The assistant cannot derive anything more from that information; it just reuses it, as if it knows you. Your name has no meaning to it.

How exactly Horus goes further is beyond this writer’s expertise, but it involves using what the system has already learned as building blocks to better interpret new data.

Navigation

If you’re blind and can’t echolocate, you need some other sensory input to avoid running into things.

If you’re sighted, you probably take for granted that your day is full of dodging corners, tree branches, pets and other people. Despite a network of sidewalks, the world is a complicated obstacle course.

Our eyes make navigation a snap. Horus uses stereoscopic sound to build a 3D map of its surroundings. With that data, and the machine learning covered above, it can advise you on how to get around safely.

Reading

While some of our written world is available in braille, most of it isn’t, and not every blind person reads braille anyway.

We suspect that with technologies like Horus, braille may eventually fall out of use. Horus uses its cameras to read text and convert it into spoken words. You need only aim your face at whatever you want to read.

Presumably, Horus could also help you notice when there is something you may want to read. In obvious situations, like holding up a food box to read the nutrition facts, it may be easy for Horus to work out what you want.

What it may not know is when you need to read a sign above your head, something a sighted person would notice at a glance. This is one reason Eyra is testing its device with blind people right now.

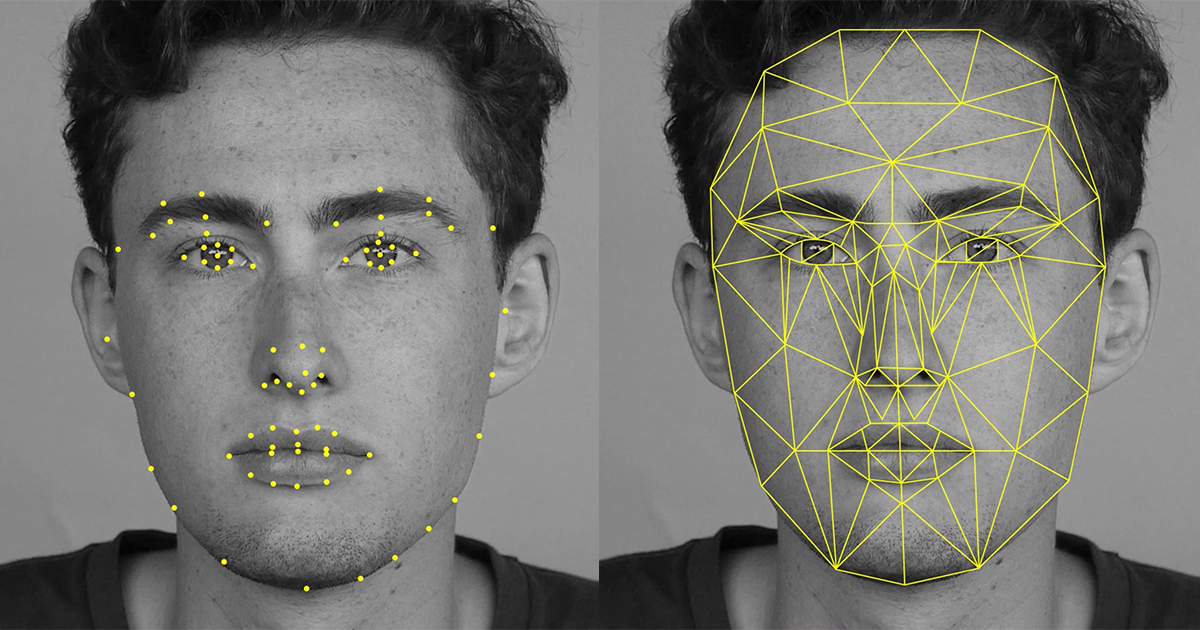

Facial Recognition

Something sighted folks take for granted is the ability to size up someone on the street with a glance.

We recognize whether we know them, and we may also sense whether they have nefarious intentions. Someone who hides his face may prompt us to walk a different way.

We’re constantly reading subtleties in other people’s faces to make assumptions about them.

Horus will help blind users recognize familiar faces and attach a name or other information to them right away.

It would be super cool if that technology could expand to detect mismatches between someone’s vocal inflection and eye movement.

Horus is still in development, but the Eyra team is close to a market-ready product. Centralizing what every device learns over a network would make the most sense, so that devices worldwide could pool their machine learning.

Until we cure all forms of sight loss, it’s comforting to know that wearables will bridge the gap.

For more on Horus, head over to Eyra’s site.